More than a year ago, we published a blog post discussing the effectiveness of using GitHub Copilot in combination with Sigasi (see original post). Since then, we’ve integrated our own AI tool, SAL (Sigasi AI layer), into Sigasi® Visual HDL™ (SVH™), making it a great time to revisit the topic. In this article, we used SAL in combination with various language models to evaluate its strengths and weaknesses.

Setup and Installation

Before using SAL’s functionalities, the first step is to configure a model. Users can choose between two types: remote OpenAI models or, for security-minded users, local models run through LM Studio. See this manual page for a more detailed guide on configuring these models.

Once you’ve set up the model, SAL offers two main functionalities:

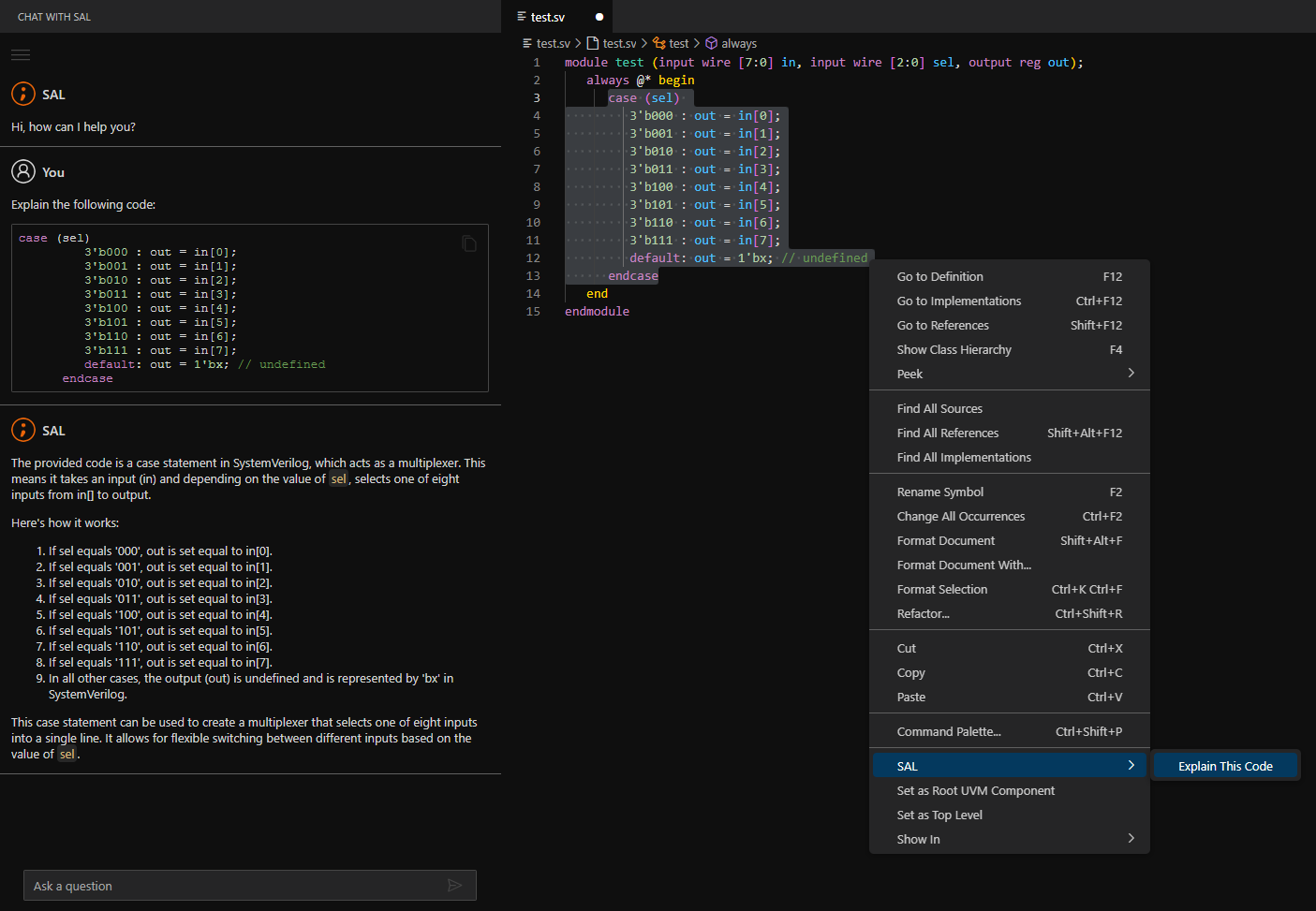

- Conversational Interaction: You can chat with SAL by pressing the SAL icon. This will show you a familiar chat interface.

- Code Explanation: You can ask SAL to explain part of your code by selecting the code, right-clicking on it, navigating to SAL, and then clicking the Explain This Code option.

Models

In contrast to GitHub Copilot, SAL lets us explore various language models. Different models share common problems, though some are more prone to specific issues. For this article, we evaluated three models: GPT-4o, deepseek-coder-6.7b-instruct Q4_O, and deepseek-coder-6.7b-instruct Q8_O.

- GPT-4o: This is the latest version of the well-known GPT language family. It is designed for a broad range of applications beyond just coding, and we ran the model remotely. GPT-4o performed relatively well in HDL code generation. However, there was a significant disparity between the quality of the generated SystemVerilog code and that of the VHDL code. While the SystemVerilog code was mostly of good quality when given straightforward prompts, the VHDL code often contained problems.

- deepseek-coder-6.7b-instruct Q4_O: This is a model of the deepseek coder family, trained mostly on code. We ran this model locally. This specific version uses a lower-precision quantization, so despite its coding specialization, the generated VHDL and SystemVerilog code were both quite poor.

- deepseek-coder-6.7b-instruct Q8_O: This model is the higher-precision quantization of the Q4_O model above. Again, we ran this model locally. When we used well-thought-out prompts, the results were great for both HDLs. Of the three models, this one consistently generated the best code.

In addition to code quality, speed and security are crucial factors to consider with regard to genAI. In this context, there’s a significant distinction between local and remote models. With a decent internet connection, any computer can generate code at the same rate using remote models. In contrast, the speed of local models depends on the given hardware’s capabilities. Therefore, GPT-4o might be a better option than the Q8_O model if your hardware is not powerful enough. On the other hand, and to make things more complicated, remote models may not always be viable due to security concerns.

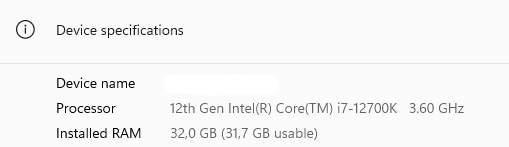

For example, my PC (see its components in the accompanying image) generated code with deepseek-coder-6.7b-instruct Q4_O at about 4.5 tokens per second using 5.37 GB of RAM, and with deepseek-coder-6.7b-instruct Q8_O at about four tokens per second using 9.01 GB of RAM. Some models become inaccessible without enough RAM, but this wasn’t an issue this time. Both models worked at a reasonable speed, but it did feel like I had to wait for every generation. A dedicated GPU would shorten this waiting time.

The best model for you depends on your use case and goals, as well as on the amount of RAM and processing power you have available.

Where SAL Goes Wrong, SVH Jumps In

Although the language models we tested vary in quality, they share many types of mistakes, which I’ve listed below. Not to worry, though: SVH can help you deal with them, since the platform notices the genAI errors instantly and suggests solutions. I’ve shown the suggestions SVH made in each case below.

Variable Naming Issues

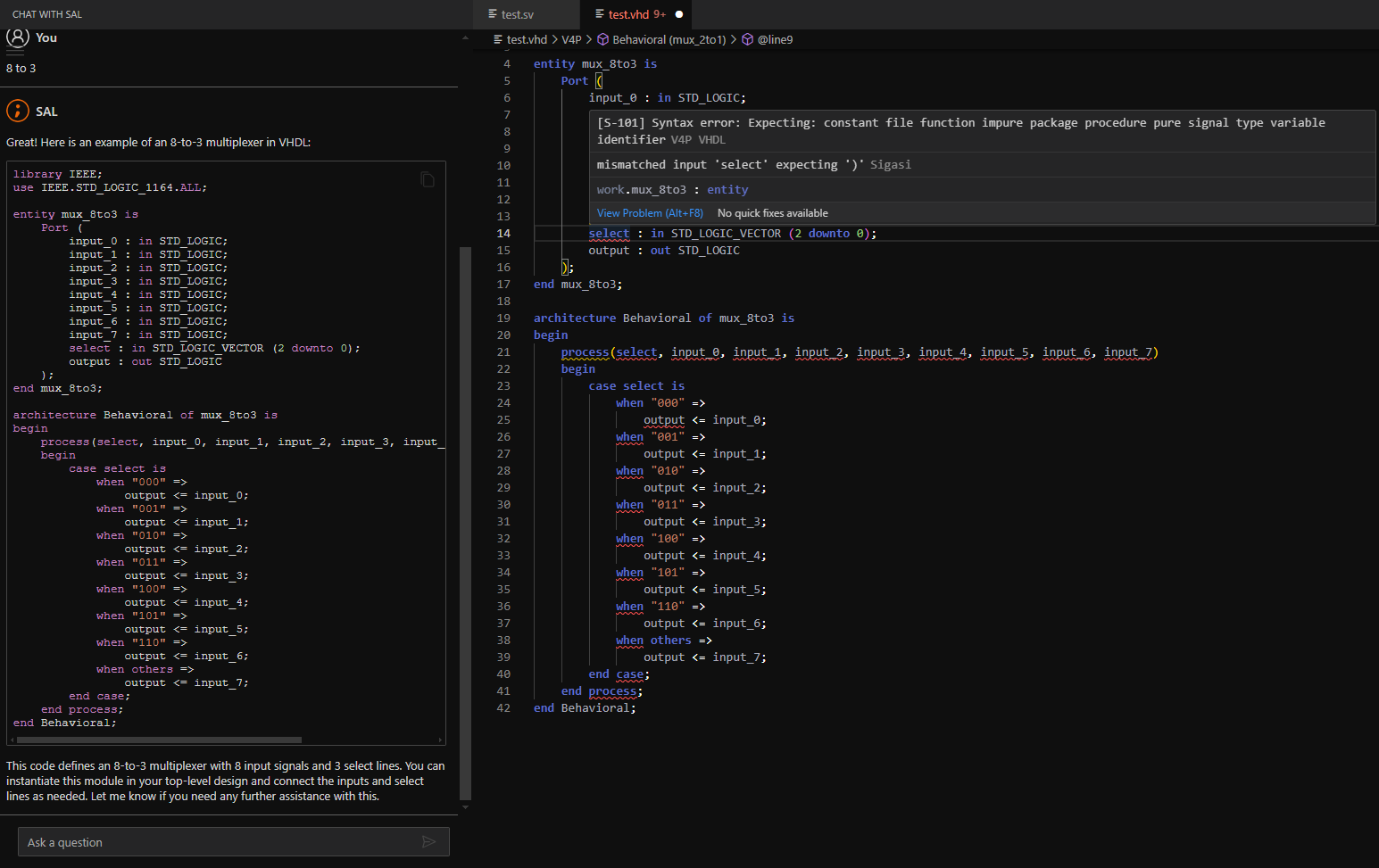

The models behind SAL sometimes choose inappropriate variable names. For example, a model may name an input of a MUX select, which is a reserved keyword. SVH detects this and lets you fix it using a Quick Fix suggestion.

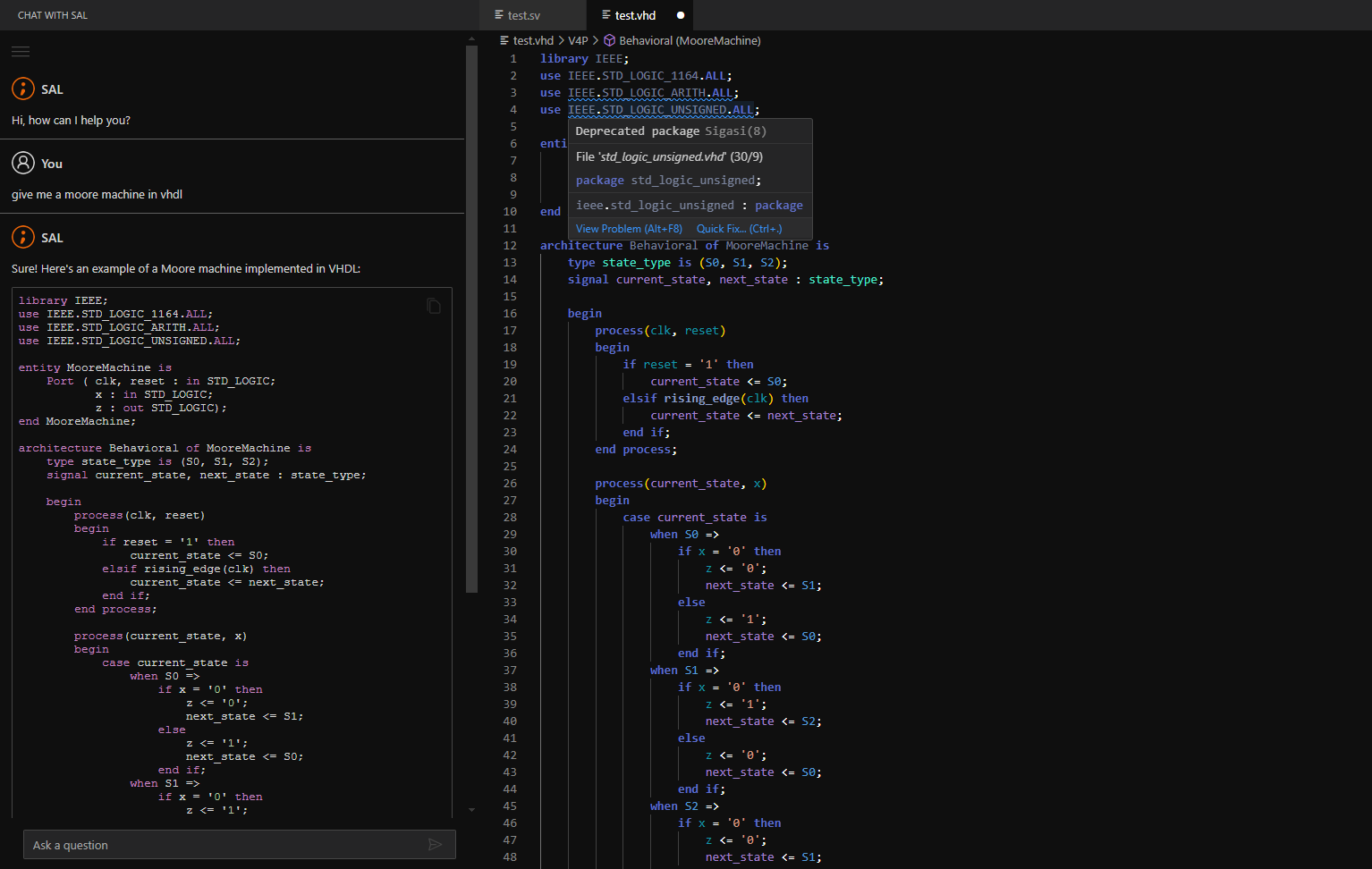
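To make the naming problem concrete, here is a minimal sketch of a 2-to-1 MUX. It is not the code from the screenshots; the entity name `mux2` and the replacement port name `sel` are hypothetical. The offending declaration is kept as a comment so the block still compiles.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

entity mux2 is
    port (
        a, b : in  std_logic;
        -- A generated port like the following does not compile, because
        -- "select" is a VHDL reserved word:
        --   select : in std_logic;
        sel  : in  std_logic;  -- renamed port (hypothetical name)
        y    : out std_logic
    );
end entity mux2;

architecture rtl of mux2 is
begin
    -- The selected signal assignment below is where the reserved word
    -- "select" legitimately appears.
    with sel select
        y <= a when '0',
             b when others;
end architecture rtl;
```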

Deprecated Packages

Models might generate outdated code or packages. SVH identifies these instances and offers solutions via Quick Fixes.
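As an illustration, and assuming the outdated packages in question are the non-standard Synopsys arithmetic packages (the article does not name them), a generated counter might be cleaned up as in the sketch below. The entity and signal names are hypothetical.

```vhdl
library ieee;
use ieee.std_logic_1164.all;
-- Non-standard packages that generated code may still pull in
-- (assumption: these are the kind of packages meant here):
--   use ieee.std_logic_arith.all;
--   use ieee.std_logic_unsigned.all;
use ieee.numeric_std.all;  -- standardized replacement

entity counter is
    port (
        clk   : in  std_logic;
        rst   : in  std_logic;
        count : out std_logic_vector(7 downto 0)
    );
end entity counter;

architecture rtl of counter is
    -- With numeric_std, arithmetic is done on an explicit unsigned type.
    signal count_i : unsigned(7 downto 0) := (others => '0');
begin
    process (clk)
    begin
        if rising_edge(clk) then
            if rst = '1' then
                count_i <= (others => '0');
            else
                count_i <= count_i + 1;
            end if;
        end if;
    end process;

    -- Convert back to std_logic_vector at the port boundary.
    count <= std_logic_vector(count_i);
end architecture rtl;
```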

Type Mismatches

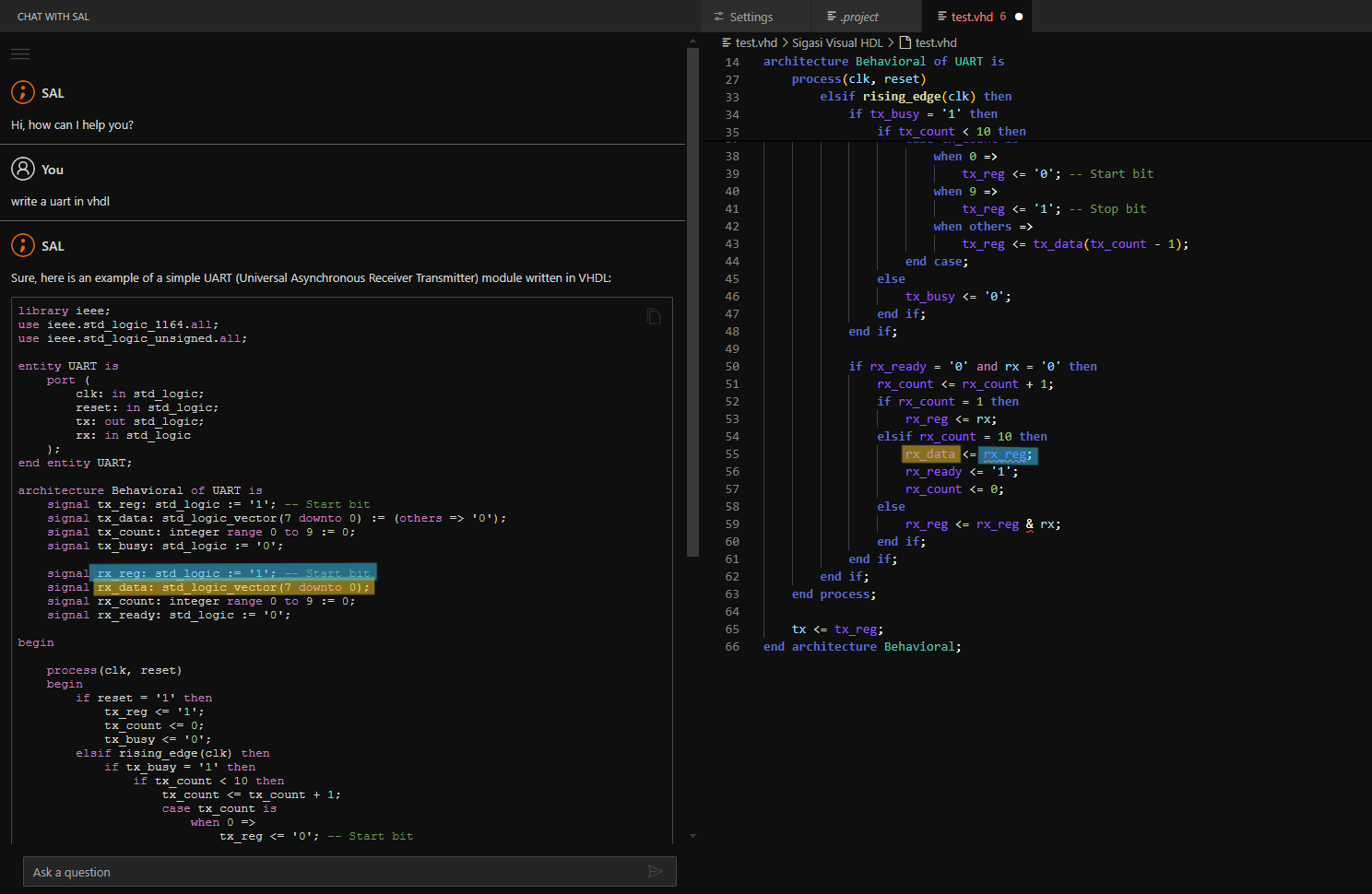

Sometimes, the models have problems determining variable types. In the example shown in the screenshot, the signal rx_reg is given the std_logic type, yet the rx_data signal is given the std_logic_vector type. SVH detects and proposes fixes for this kind of error.
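Separately from the screenshot, the sketch below shows one way such a mismatch can arise and be resolved. Only the names rx_reg and rx_data come from the article; the entity, the 8-bit width, the `shift_reg` signal, and the shift-register fix are assumptions about what the generated code intended.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

entity rx_sketch is
    port (
        clk     : in  std_logic;
        rx      : in  std_logic;
        rx_data : out std_logic_vector(7 downto 0)
    );
end entity rx_sketch;

architecture rtl of rx_sketch is
    signal rx_reg    : std_logic;  -- a single bit
    signal shift_reg : std_logic_vector(7 downto 0) := (others => '0');
begin
    process (clk)
    begin
        if rising_edge(clk) then
            rx_reg <= rx;
            -- Faulty assignment of a single bit to an 8-bit vector:
            --   rx_data <= rx_reg;
            -- Assumed intent: shift the sampled bit into a vector.
            shift_reg <= shift_reg(6 downto 0) & rx_reg;
        end if;
    end process;

    rx_data <= shift_reg;
end architecture rtl;
```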

Array Sizes

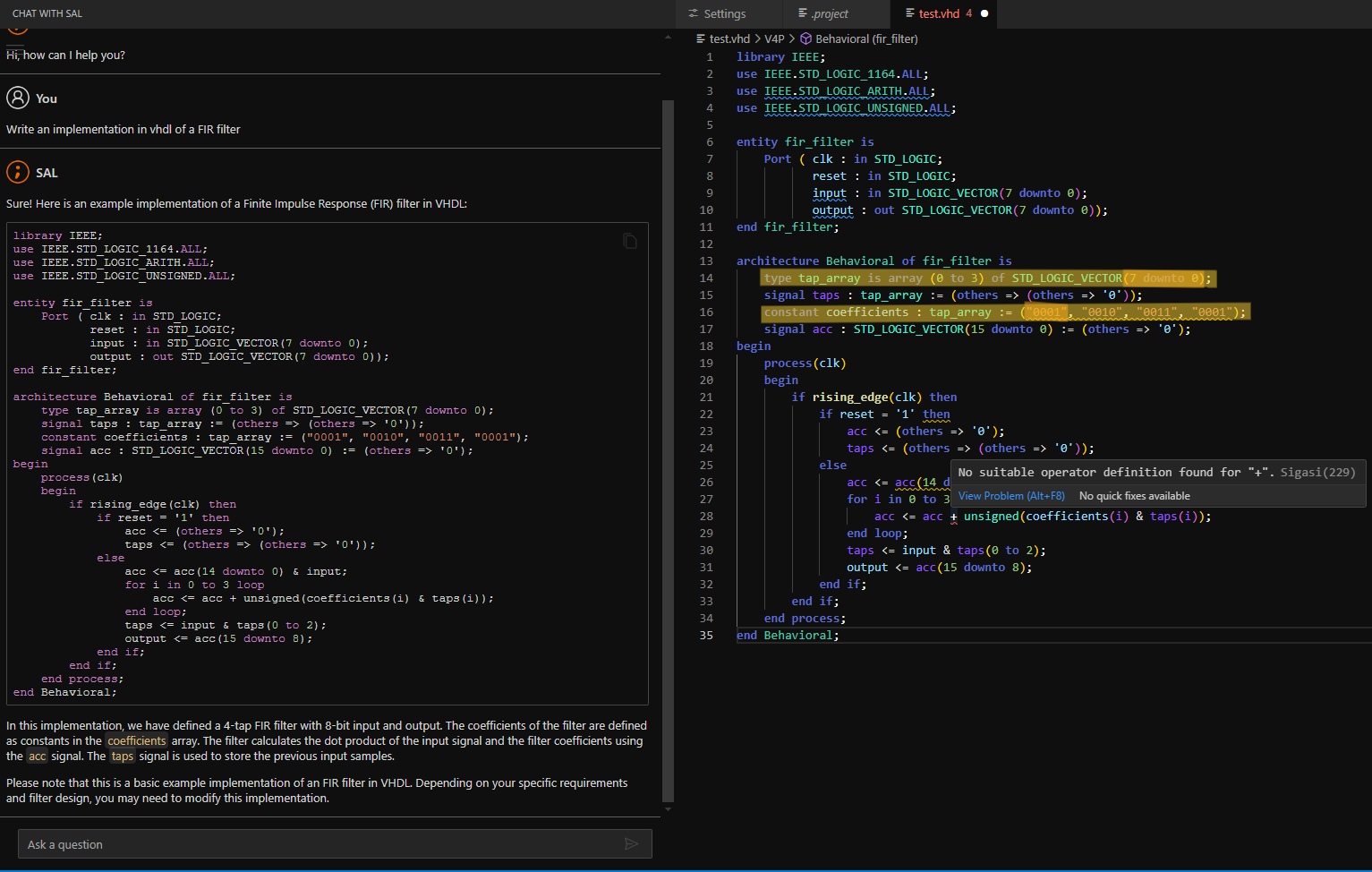

AI can also struggle with variable types when these variables have predetermined sizes. In the example shown in the screenshot, SAL declares a type called tap_array, an array of 8-bit std_logic_vectors. However, when the constant coefficients is declared using this type two lines lower, SAL mistakenly assumes that the std_logic_vectors only have four bits. Luckily, SVH automatically warns us that this is a mistake.
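A minimal sketch of this size mismatch, independent of the screenshot, could look as follows. The names tap_array and coefficients come from the article; the package name, the number of taps, and the coefficient values are made up for illustration.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

package fir_types is
    -- Each element is an 8-bit vector (four taps is an assumption).
    type tap_array is array (0 to 3) of std_logic_vector(7 downto 0);

    -- Faulty: 4-bit literals do not match the 8-bit element type.
    --   constant coefficients : tap_array := ("0001", "0010", "0011", "0100");

    -- Corrected: every literal is 8 bits wide (values are hypothetical).
    constant coefficients : tap_array := (x"01", x"02", x"03", x"04");
end package fir_types;
```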

Unused Signals

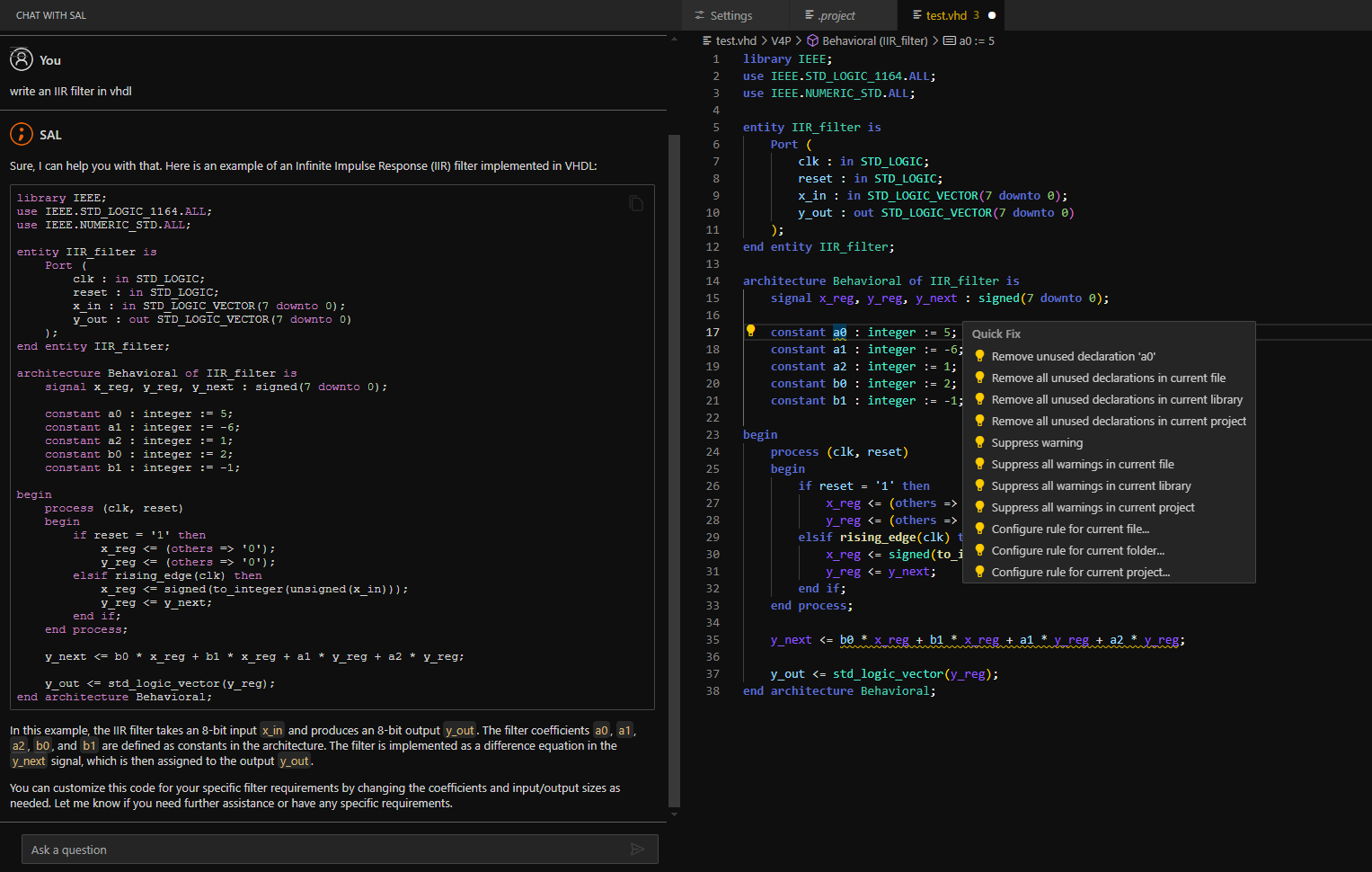

Occasionally, AI generates code with declared but unused signals. In the example shown in the screenshot, one of the coefficients (a0) is declared but never actually used in the calculation. SVH highlights and helps resolve these issues.
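The pattern can be sketched as below. Only the name a0 comes from the article; the entity, the other names, the widths, and the coefficient values are hypothetical, and the fix assumes the intended calculation was a two-term weighted sum.

```vhdl
library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;

entity filter_sketch is
    port (
        x0, x1 : in  signed(7 downto 0);
        y      : out signed(15 downto 0)
    );
end entity filter_sketch;

architecture rtl of filter_sketch is
    signal a0 : signed(7 downto 0) := to_signed(3, 8);  -- hypothetical value
    signal a1 : signed(7 downto 0) := to_signed(5, 8);  -- hypothetical value
begin
    -- Faulty: a0 is declared above but never referenced.
    --   y <= a1 * x1;
    -- Assumed intent: both coefficients take part in the sum.
    y <= a0 * x0 + a1 * x1;
end architecture rtl;
```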

Complex Questions

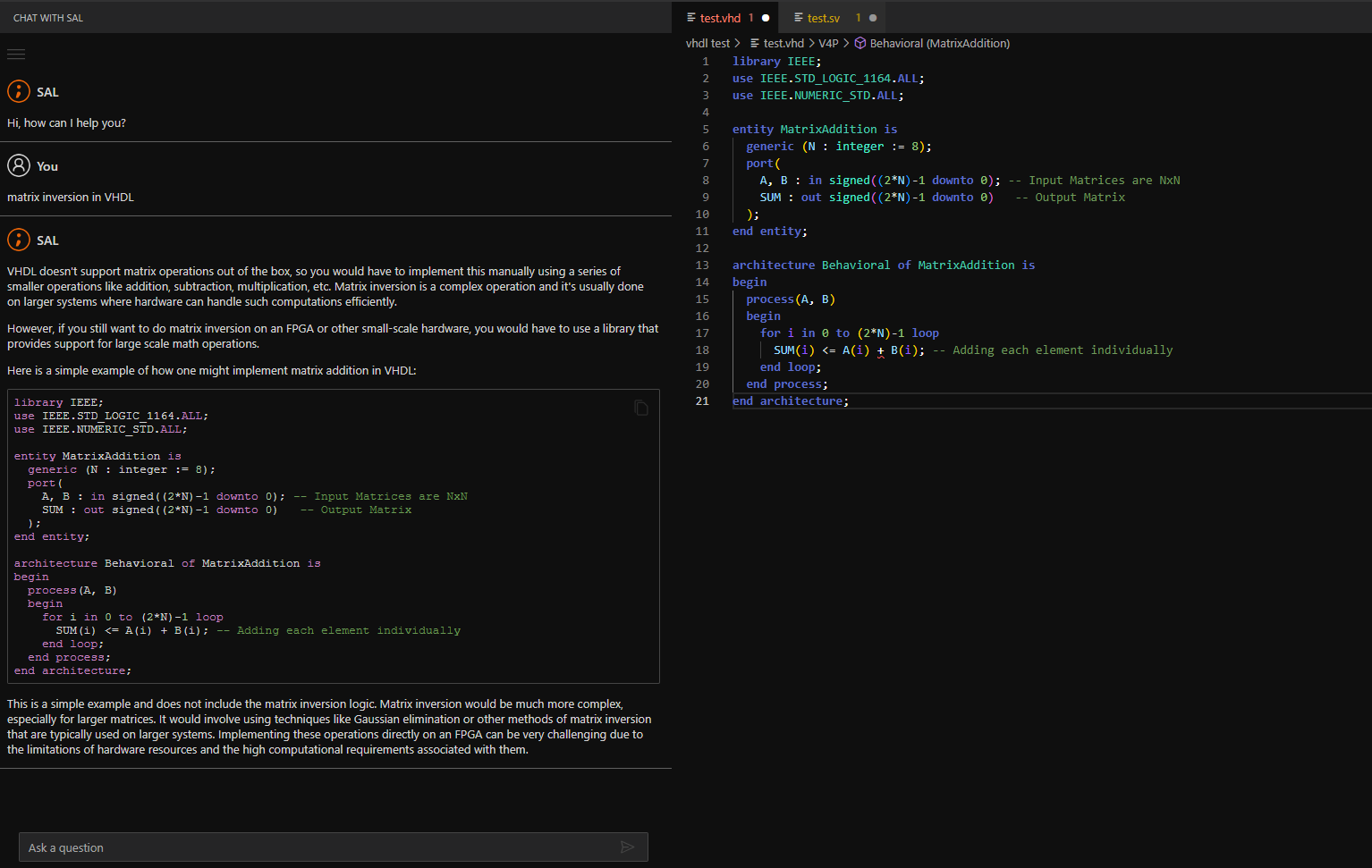

The model made multiple errors when asked to write VHDL code to find a matrix inverse. It generated code for adding matrices instead of finding the inverse, used incorrect array sizes, and performed incorrect operations for the data types. These issues highlight the limitations of AI models when pushed beyond their comfort zones. SVH’s excellent type-checking recognizes the mismatches.

Boilerplate and Templates

SAL excels at answering simple questions about code and generating relatively straightforward code. As such, it’s adept at generating boilerplate code, but it quickly runs into the problems described above whenever business logic is introduced. If all you want to do is write less boilerplate code, the best solution is to use tried-and-true templates, which have been available in IDEs and text editors for years and have no hardware requirements. SVH already includes a wide selection of built-in templates that integrate seamlessly into the editing process, ensuring correctness and allowing for swift customization of variable names while writing HDL code. Additionally, we will be greatly expanding the number of built-in templates in the next release, including templates for verification methodologies like UVM, OSVVM, VUnit, and UVVM.

Conclusion

While genAI models for HDL still suffer from many issues, SVH’s validation features significantly reduce the risks of using such generated code, ensuring higher quality and reliability. Meanwhile, SVH’s templates make genAI obsolete in many cases. If you do choose to use genAI, SAL allows you to easily switch between models, both local and remote. SVH and HDL generation tools work harmoniously, compensating for each other’s limitations.

See also

- Exploring GitHub Copilot (blog post)

- Sigasi's Software Development Kit Part 2 (legacy)

- Sigasi's Software Development Kit Part 1 (legacy)

- Context Sensitive Autocompletion (screencast)

- Using Custom Templates in Sigasi 2 (legacy)